EHS Audits – Have We Lost Our Way? Part III

Mar 12th, 2013 | By Lawrence Cahill and Robert Costello | Category: Auditing

On July 11, 2010, an article was published in the EHS Journal titled “EHS Audits – Have We Lost Our Way?” That article was followed a year later by a sequel that explored the topic more fully. The articles elicited numerous thoughtful comments and a lot of general discussion. The premise of the original articles was that perhaps environmental, health, and safety (EHS) audit programs have evolved, particularly in the United States, into mere checks of administrative requirements and not evaluations of risk. As a consequence, some people are coming to believe that EHS audits are not as value-added as they could be.

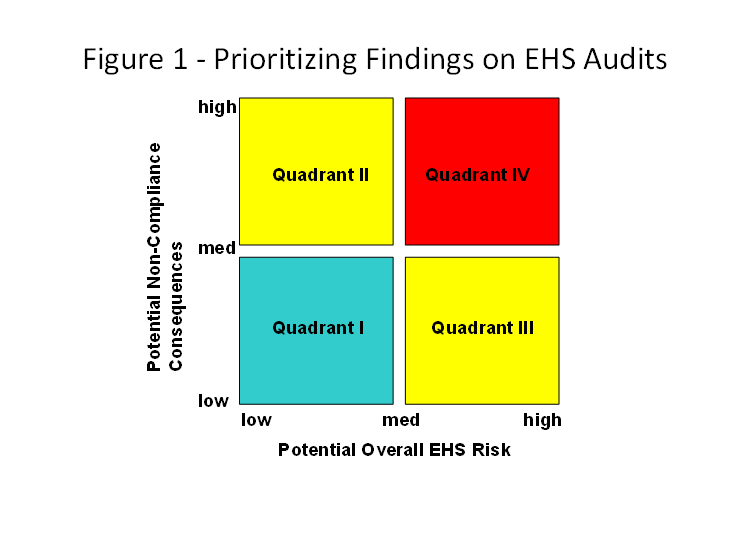

In the second article, we proposed a methodology that could determine if a given EHS audit was focused on the right things. In essence, each audit finding would be given a score ranging from one to ten based on two different factors: the potential consequences of the non-compliance and the potential overall risks posed by the finding. The scores of the individual findings would then be plotted on a two-by-two grid, much like the one presented in Figure 1. A more detailed discussion of the methodology and examples of typical findings that might be placed in each of the quadrants can be found in the 2011 article.

In 2011 and 2012, we tested this methodology on more than a dozen audits, both in the U.S. and in other countries. The results were quite interesting if not conclusive. This article discusses some of the more interesting outcomes.

Creating a Theory for the Expected Outcome

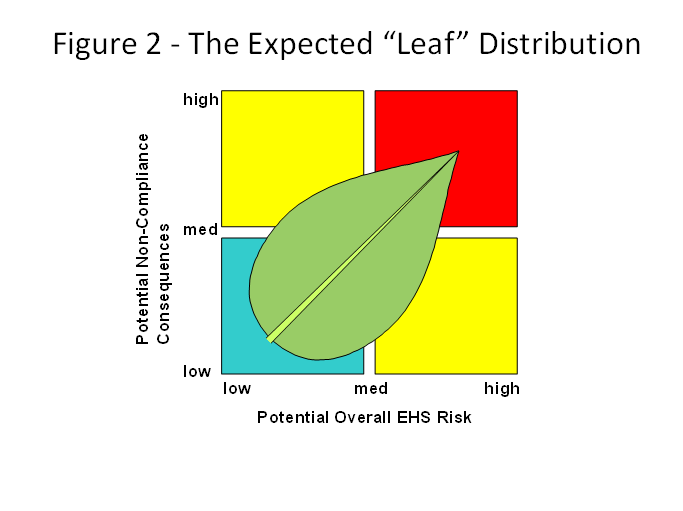

We thought long and hard about what might be an ideal distribution of audit findings and came up with the “leaf” distribution shown in Figure 2. This distribution would apply mainly to a mature audit program where sites have been through one or more audit cycles. Described below is the rationale for the theory. It might be an over-simplification, but our view is that simple is better.

First, we didn’t think that there should be a concentration of findings in the truly low/low category. A large number of findings in this area (the lower left corner of Quadrant I) would imply that the audit team identified a significant number of minor, administrative deficiencies. Although they might indeed exist at a given site, an audit report is usually not the best place to record them. Many companies classify these as “local attention only” findings and do not include them in the audit report because they can create unnecessary “noise” when management reviews and acts upon the report findings. For this reason, the leaf is not positioned at the low/low intersection but moved up and to the right.

Second, as one moves up both axes we would expect to see a fanning out of the distribution in both directions. This would indicate the auditors are focusing on both regulatory compliance and relative risks.

Third, the distribution would hopefully taper off in Quadrant IV (particularly for more mature audit programs) because it is unlikely that there would be many high risk, high compliance consequence findings. At least, one would hope not.

Using the Excel® “Bubble Chart” Application

We found that plotting the points on a simple scattergram could obscure the fact that numerous findings shared the same score. A better solution was to use the “bubble chart” application found in Excel®, which shows the distribution of findings more clearly.
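The overlap problem can be illustrated with a minimal Python sketch. It is not part of the authors' Excel® workflow. It assumes each finding is stored as a (compliance, risk) pair of scores from 1 to 10; the ordering of the pair, the function name `bubble_sizes`, and the sample data are all hypothetical and chosen only for illustration.

```python
from collections import Counter


def bubble_sizes(findings):
    """Count how many findings share each (compliance, risk) score pair.

    On a plain scatter plot, identical pairs land on top of one another.
    The count per pair is what a bubble chart would show as bubble size.
    """
    return Counter(findings)


# Hypothetical scores, not taken from any audit discussed in this article.
findings = [(4, 3), (4, 3), (4, 3), (2, 5), (2, 5), (7, 6)]

sizes = bubble_sizes(findings)
for (compliance, risk), count in sorted(sizes.items()):
    print(f"compliance={compliance} risk={risk} findings={count}")
```

Six findings collapse to three distinct points here, which is the information a simple scattergram would hide.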

The following guidance applies to data portrayed on a bubble chart:

- Each audit finding has been given a compliance score of 1 to 10 and a risk score of 1 to 10.

- The size of the bubble represents the number of findings with that particular score (e.g., 4,3). The larger the bubble, the greater the number of findings.

- The white star represents the average score for all the findings.

- The varying colors are only for visual effect.

- Scores ranging from 1 to 5 are considered low and fall in the lower half of an axis; those ranging from 6 to 10 are considered high and fall in the upper half.
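The summary figures reported for each case study (average scores, the share of low/low findings, and the spread across quadrants) could be computed along the following lines. This is a sketch under stated assumptions, not the authors' actual calculation: findings are assumed to be (compliance, risk) pairs, the “1,1 to 2,2” low/low classification is read as both scores being 2 or less, and the function names and sample data are hypothetical.

```python
from collections import Counter
from statistics import mean

LOW_MAX = 5      # scores 1-5 count as "low", 6-10 as "high"
LOW_LOW_MAX = 2  # assumed reading of the "1,1 to 2,2" low/low classification


def level(score):
    """Label a single 1-10 score as low or high."""
    return "low" if score <= LOW_MAX else "high"


def summarize(findings):
    """Return average scores, low/low share, and counts per quadrant."""
    compliance = [c for c, _ in findings]
    risk = [r for _, r in findings]
    low_low = sum(
        1 for c, r in findings if c <= LOW_LOW_MAX and r <= LOW_LOW_MAX
    )
    return {
        "avg_compliance": mean(compliance),
        "avg_risk": mean(risk),
        "low_low_share": low_low / len(findings),
        # Keys are (compliance level, risk level) pairs.
        "quadrants": Counter((level(c), level(r)) for c, r in findings),
    }


# Hypothetical scores, not taken from any audit discussed in this article.
findings = [(1, 1), (2, 2), (3, 4), (4, 3), (6, 7), (2, 6), (3, 5), (5, 5)]

summary = summarize(findings)
print(f"average compliance: {summary['avg_compliance']}")
print(f"average risk: {summary['avg_risk']}")
print(f"low/low share: {summary['low_low_share']:.0%}")
print(dict(summary["quadrants"]))
```

The quadrants are keyed by level labels rather than by number so that the sketch does not depend on any particular quadrant numbering.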

Four case studies are presented below.

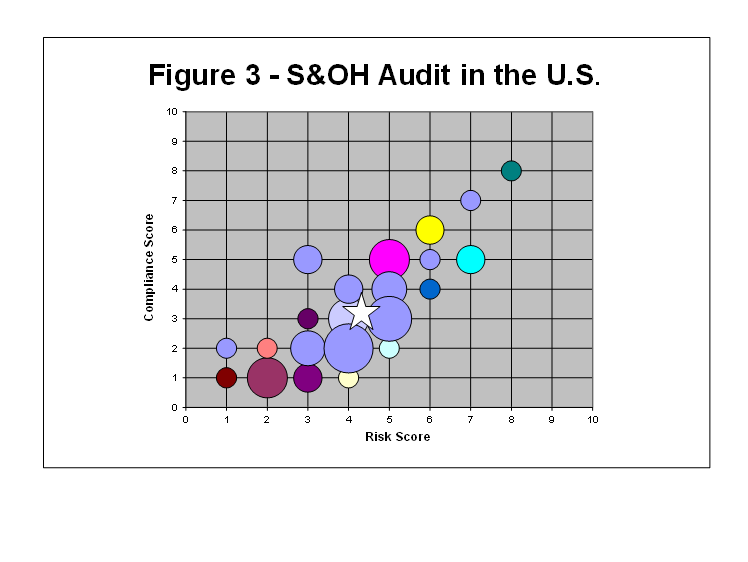

Case 1: A Safety and Health Audit in the U.S.

This audit of a major chemical manufacturing facility had 49 findings (Figure 3).

The largest circle represents 6 findings with the same score and the smallest circles represent 1 finding with the same score. The average score was 4.2 for risk and 3.2 for compliance with 14% of the findings in the low/low classification (1,1 to 2,2).

The resultant distribution is much like the leaf model. There are not too many low/low findings (14%), and the team appears to have focused on both regulatory compliance and risk, with the average risk score somewhat higher than the average compliance score.

All in all, an audit well done.

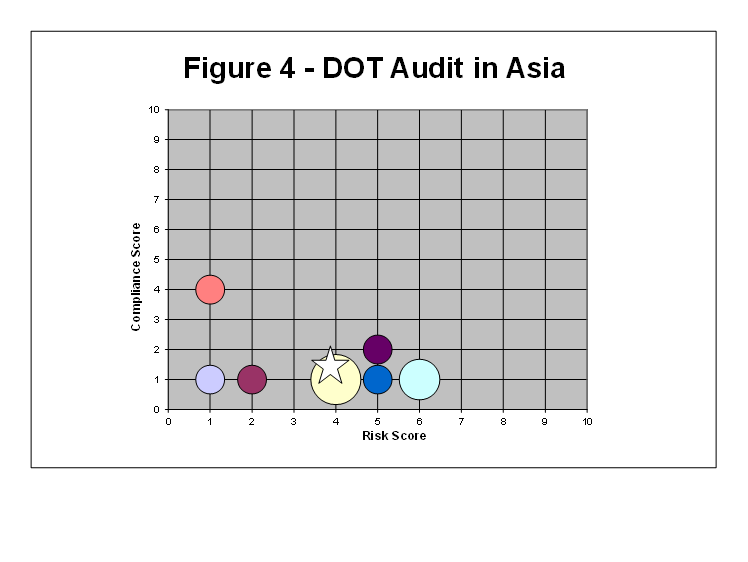

Case 2: A DOT Audit in Asia

This audit of a distribution center in Asia had 10 findings (Figure 4).

The largest circle represents 3 findings with the same score, and the smallest circles represent 1 finding with the same score. The average score was 3.8 for risk and 1.4 for compliance with 20% of the findings in the low/low classification (1,1 to 2,2).

There was only one finding with a compliance score above two, and 20% of the ten findings were in the low/low classification. It was not that surprising that there was only one finding in an upper quadrant given that it was a distribution center, but the lack of compliance findings was a bit surprising. The feedback given by the audit team was that there are very few if any distribution or transportation regulations in the country although this could not be confirmed. At least the analysis made us ask the question.

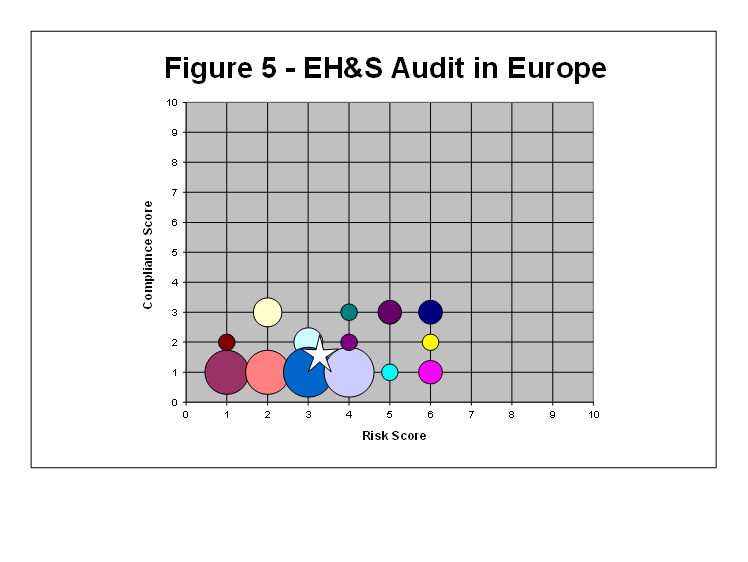

Case 3: An EH&S Audit in Europe

This audit of a major agricultural operation had 49 findings (Figure 5).

The largest circles represent 9 findings with the same score and the smallest circles represent 1 finding with the same score. The average score was 3.1 for risk and 1.4 for compliance with 31% of the findings in the low/low classification (1,1 to 2,2).

These audit results were very interesting. Note the very low average score for compliance (1.4) and the fact that 31% of the findings were in the low/low classification. It turned out that the audit team members could neither speak nor read the local language and did not research the country’s EHS regulations before the audit. Therefore, they focused on company standards and good management practices. The chart results made that approach pretty obvious. As a result, an action plan was put together for future audits to assure that audit teams had at least one team member who was fluent in the local language. The action plan also required that research on applicable local regulations (e.g., purchase of a country-specific audit protocol) be conducted in advance of each audit.

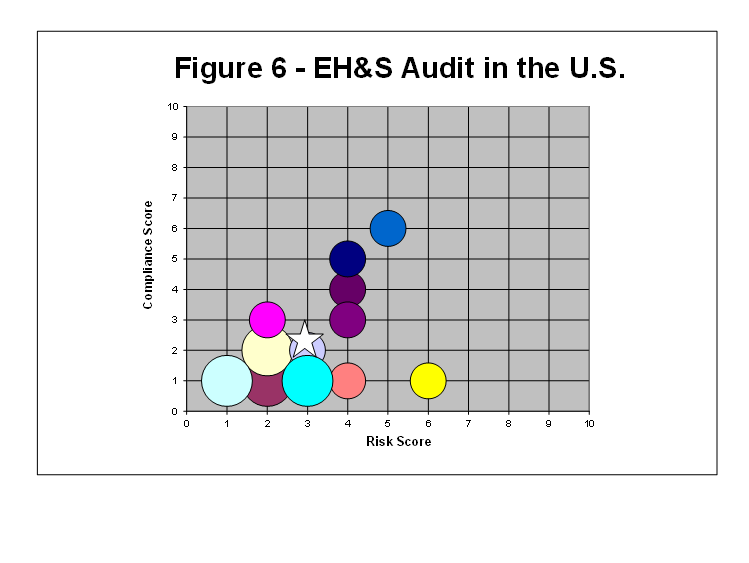

Case 4: An EH&S Audit in the U.S.

This audit of a seasonal manufacturing facility had 16 findings (Figure 6).

The largest circles represent 2 findings with the same score and the smallest circles represent 1 finding with the same score. The average score was 3.0 for risk and 2.2 for compliance with 38% of the findings in the low/low classification (1,1 to 2,2).

This audit resulted in a low compliance score (2.2) and 38% of the findings in the low/low classification (1,1 to 2,2). Here, it turned out that the processing area was not operating at the time of the audit; the plant was essentially shut down. Thus, the vast majority of findings were in Quadrant I. Historically, in this company, seasonal operations were mostly audited in the off-season, the rationale being that “we’re just too busy to handle an EHS audit during our busy season.” That raises the question, of course: wouldn’t you want to audit the site when activity is at its peak? In this case, the corrective action included modifying the audit schedule so that these types of operations were not always audited at the same time of year in each audit cycle.

Conclusion

Using a methodology similar to the one described in this article can help to improve the value of an EHS audit program. For the cases discussed in this article, the analysis got to the root of some very important questions:

- Are we too heavily focused on minor administrative requirements?

- Do our auditors have the compliance tools and language skills to conduct audits in a variety of different countries?

- Are we visiting the sites at the best time of year to assure operations are active?

There are numerous other audit program issues that a findings risk analysis can uncover. Take a good, long, hard look at your audit program. Are you getting both what you want and what you need?

Related Articles by Lawrence Cahill and Robert Costello

- Classic Auditor Failures (Cahill and Costello)

- Repeat Versus Recurring Findings in EHS Audits (Cahill and Costello)

- Lessons Learned on International Assignments (Cahill)

- Environmental Audits Versus Health and Safety Audits (Cahill and Costello)

- Using Risk Factors to Determine EHS Audit Frequency (Cahill)

- Measuring the Success of an EHS Audit Program (Cahill)

- Statistically Representative Sampling on EH&S Audits: Expectations Established by Third Parties (Cahill)

- Outsourcing EHS Audits: Does it Make Sense? (Cahill)

- EHS Audits – Have We Lost Our Way? (Cahill)

- EHS Audits – Have We Lost Our Way? A Sequel (Cahill and Costello)

About the Authors

Lawrence B. Cahill, CPEA (Master Certification) is a Technical Director at Environmental Resources Management in Exton, Pennsylvania, U.S.A. He has over 30 years of professional EHS experience with industry and consulting. He is the editor and principal author of the widely used text, Environmental, Health and Safety Audits, published by Government Institutes, Inc., and now in its 9th Edition. He has published over 60 articles and has been quoted in numerous publications including the New York Times and the Wall Street Journal. Mr. Cahill has worked in over 25 countries during his career. He holds a B.S. in Mechanical Engineering from Northeastern University, an M.S. in Environmental Health Engineering from Northwestern University, and an MBA from the Wharton School of the University of Pennsylvania.

Robert J. Costello, PE, Esq., CPEA is a Partner with Environmental Resources Management in Exton, Pennsylvania, U.S.A. He has over 17 years of professional environmental resource management and consulting experience. Mr. Costello manages global regulatory compliance, management system, and sustainability assurance programs and participates personally on-site in typically 30+ audits and assessments per year. He holds a B.S. in Environmental Engineering from Wilkes University, an M.S. in Environmental Engineering from Syracuse University, and a J.D. from Syracuse University. Mr. Costello is admitted to the bar in Pennsylvania, is a licensed professional engineer in Pennsylvania and Delaware, and is a Certified Professional Environmental Auditor.

Photograph: Day Window by Ilco, Izmir, Turkey.

Excellent article! Our firm is presently involved in developing a similar approach based on risk and financial implications. I’ll look forward to reading more of this work. Thank you!

Very interesting article. We have been using a similar approach for the last 4 years, especially for multi-site and/or multi-year audit programmes, in which each finding is allocated a potential severity score and a likelihood score, which are also used to calculate a risk ranking for it.

Over the years, we have enhanced our methodology to include the ability to provide an overall site ranking, in order to track year-on-year progress but also to build a risk profile for the sites across the client’s business.

Although the risk profiling is important, we also relate findings to programme areas to identify systemic issues, so the client can target those areas to prevent the same or similar issues occurring in future. Treating the root cause(s) rather than the symptom(s) increases the value of auditing.

The bubble chart looks to be an interesting visual representation of the information.